Plamen Krastev, Harvard University, June 20, 2018

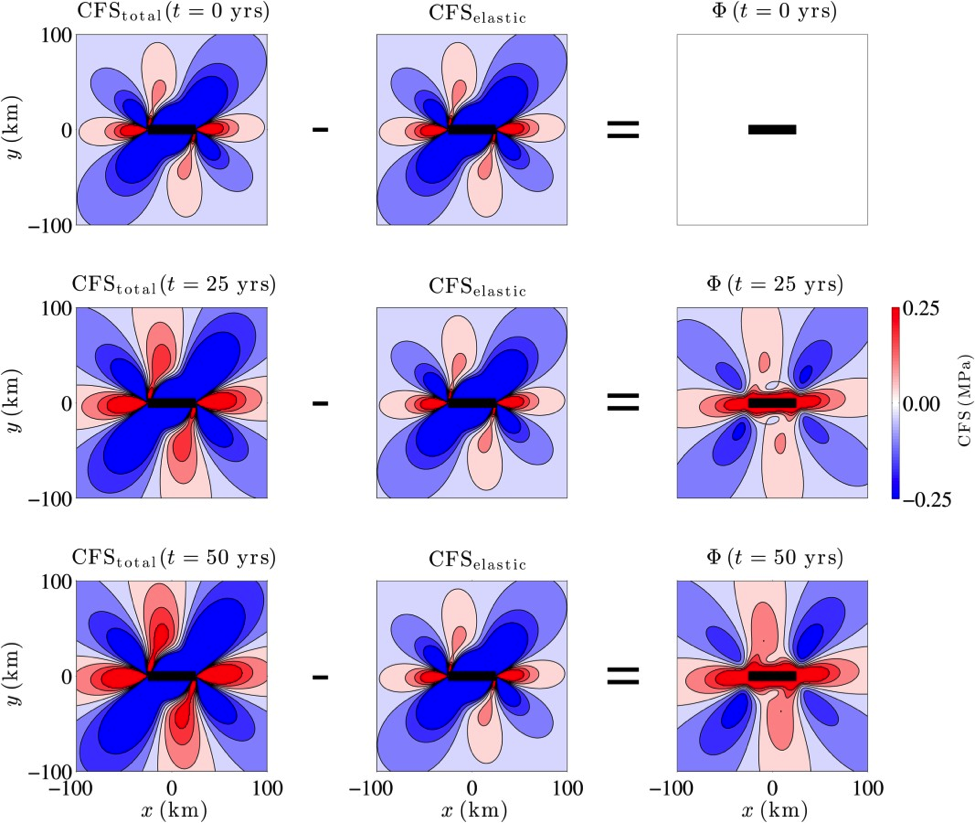

Researchers from the Department of Earth and Planetary Sciences at Harvard University aimed to understand how stresses from one earthquake propagate from the epicenter and can eventually trigger other earthquakes. By leveraging state-of-the-art computational resources of Faculty of Arts and Sciences (FAS) Research Computing with significant facilitation from ACI-REFs, the runtime of the research group’s earthquake simulation code was reduced from 32 weeks to 12 hours with excellent scalability and efficient memory usage. These extraordinary improvements of application performance enabled researchers to study tectonic motions and earthquake stress propagation in details and scales they never thought possible. They also laid the foundation for further studies aiming to identify precursors to earthquakes and ultimately predict them.

The Objective

In late 2014, Plamen Krastev (ACI-REF) from the FAS Research Computing group at Harvard University, was first approached by Brendan Meade, a Professor in Earth and Planetary Sciences, and his graduate student, Phoebe Robinson DeVries, who studied earthquake dynamics and how seismic shocks from one event could trigger other earthquakes. The researchers had implemented a sophisticated 3D model – incorporating a massive amount of geodetic and seismic data, plus 2,000 years of recorded earthquake history – to simulate the earth crust dynamics during earthquakes and investigate how subsequent events are caused by stresses released by previous quakes. They aimed to understand the time delay of subsequent earthquakes and ultimately attempt to predict them. In order to achieve this goal, the model needed to be evaluated thousands of times over a wide set of input parameters and large datasets. However, there were two significant computational challenges that hindered further research progress. First, the initial MATLAB implementation of the model would take a prohibitively long time to run – 32 weeks for a typical data set. Second, the size of datasets would impose severe memory constraints and prevent evaluating the model over a wide set of input parameters. In addition to advancing the earthquake studies and prediction research, resolving these computational challenges was critical for the successful completion of Phoebe’s dissertation.

Accordingly, the first goal was to vastly improve the speedup of the researcher’s application, by implementing parallelization and leveraging on the state-of-the-art computational resources of FAS Research Computing. The second goal was to distribute the large datasets across many compute nodes in order to remedy the memory issues and allow for evaluating the model over wider parameter spaces. Accomplishing these goals would present the researchers with the extraordinary opportunity to perform the first 3D parallel (viscoelastic) simulations of earthquake dynamics in unprecedented details, and would lay the foundation for achieving what is considered the “holy grail” in the earthquake science – earthquake prediction. As such, the project’s success would have far-reaching impacts not only for Harvard University’s researchers but also for the earthquake research as a whole.

The Solution

Following the initial engagement, Brendan, Phoebe, and Plamen determined several specific steps required for achieving the project objectives:

- Porting the original MATLAB code into a compiled computer language more suitable for explicit parallelization in a HPC environment.

- Identifying the most computationally intensive parts of the code and implementing parallelization to speed up calculations.

- Designing a distribution scheme for the large data sets so that they could be split across many compute nodes, on the Research Computing cluster (Odyssey), and could fit in computer memory.

After considering several compiled computer languages, FORTRAN 90 was chosen for implementing the algorithms of the original MATLAB code. FORTRAN provided clean syntax and extensive support in terms of mature numeric libraries and parallel capabilities specifically designed for High Performance Computing. Moreover, Phoebe didn’t have previous experience in FORTRAN, but its syntax was very similar to that of MATLAB, a language she already knew well. In several weeks, Phoebe translated the original code into FORTRAN, and this alone led to a factor of 5 speedup, as compared with the original implementation.

Once there was a working serial version of the code, the next step was to implement parallelization. As a starting point we decided to experiment with a threaded (OpenMP) parallel model – it was relatively simple to program, and it would give us an idea of the code’s scaling. With some help, Phoebe implemented a parallel threaded version of the code and demonstrated a 10x speedup, as compared with the serial version. Motivated by these successes, Brendan and Phoebe were eager to undertake a more aggressive approach to parallelization, with message-passing-interface (MPI), in order to distribute the computation across many compute nodes leveraging the full capabilities of the Odyssey cluster. Because the researchers didn’t have previous experience with MPI, and Plamen already had the required expertise in parallel computing, it was agreed that it would be more efficient and straightforward, if Plamen implemented the MPI parallelization. The distributed (MPI) parallel version of the code demonstrated an extraordinary speedup and scalability – runs that would take 32 weeks to finish were completed in approximately 12 hours with only 256 MPI parallel tasks. Seeing these results Brendan Meade wrote:

“This is fantastic! Just amazing to see what’s possible. The reduction in time seems spectacular and the type of thing that will make a real difference. So excited to have this moving forward!”

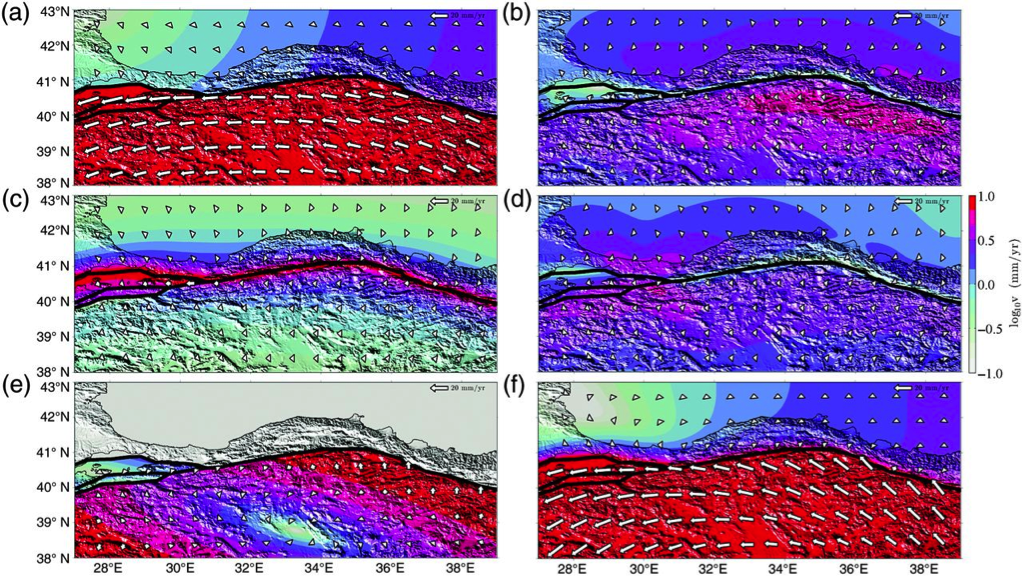

Motivated by the spectacular speedup of the parallel MPI version of the code, the researchers tackled the last step of the project – distributing the data sets across many compute nodes. The size of the datasets would go up with the problem size. The computed quantities were stored in 3D array structures and it would be impossible to attack interesting and scientifically important problems without distributing these data across many compute nodes. Plamen designed and implemented a distribution scheme where each MPI parallel process would have only the parts of the arrays required for the computation, not the whole data structures. This solution drastically reduced the memory requirements. Plamen also implemented parallel (HDF5) I/O to write the final datasets to disk in parallel. These improvements made the code flexible enough to handle problems of arbitrary size which allowed the researchers to attack really large and practical problems. For example, they modelled the conditions in the North Anatolian Fault, which stretches from eastern Turkey to the Aegean Sea, and has experienced an advancing series of strong quakes during the past 80 years.

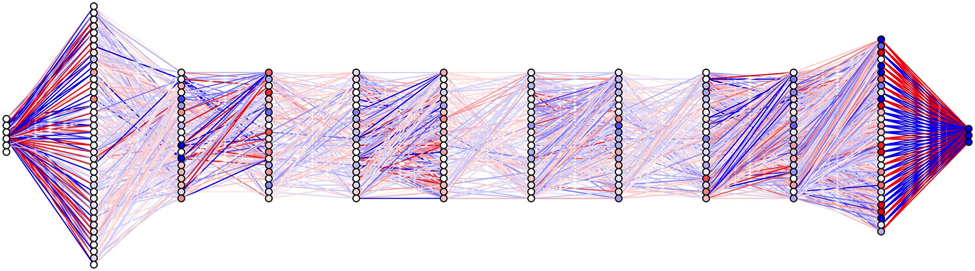

The final version of the code represents the first parallel implementation of realistic 3D simulations of earthquake dynamics and, to date, has been successfully applied in various studies that led to major publications in prestigious journals and presentations at major national and international conferences (see below). It was also instrumental, and provided the basis, for innovative Deep Learning algorithms which accelerated these calculations further by more than 50,000%. This magnitude of acceleration will enable the modeling of geometrically complex faults over thousands of earthquake cycles across wider ranges of model parameters and at larger spatial and temporal scales than have been previously possible.

The Result

In the spring of 2017, Phoebe DeVries successfully defended her Ph.D. dissertation, which relied heavily on result obtained with the code developed in collaboration with Plamen Krastev (Harvard University ACI-REF). The results of these studies have been published in prestigious peer reviewed journals and presented at numerous national and international conferences, and public outreach lectures. They also have been featured in several media releases, such as The Harvard Gazette, Harvard Magazine, Harvard Horizons Symposium, and MGHPCC. Moreover, the work in collaboration with FAS Research Computing (RC) laid the foundation for extensive calculations and studies of other earthquake regions, such as the San Andreas Fault in California, the Kunlun Fault in Tibet, and the Alpine Fault in New Zealand, that would be not feasible otherwise. Most importantly, it has resulted in far-reaching impacts for the earthquake research at Harvard and beyond, and brings scientists a step closer to answering the question of not only where the next earthquake will strike, but also when. Professor Brendan Meade writes:

“This has indeed been a fabulous collaboration. We’re now doing viscoelastic calculations that are simply not accessible with other codes. The science being revealed is quite exciting and this code will be used for an awful lot more things. Thanks team RC!”

Notable Publications and Presentations Resulting from this Work

Publications:

DEVRIES, P., T. Ben Thompson, and B. Meade (2017), Enabling large-scale viscoelastic calculations via neural network acceleration, Geophysical Research Letters, 44, doi:10.1002/2017GL072716.

DEVRIES, P., P. Krastev, J. Dolan and B. Meade (2016), Viscoelastic block models of the North Anatolian fault: a unified earthquake cycle representation of pre- and post-seismic observations, Bulletin of the Seismological Society of America, 107, doi:10.1785/0120160059.

DEVRIES, P. and E. Evans (2016), Statistical tests of simple earthquake cycle models, Geophysical Research Letters, 43, doi:10.1002/2016GL070681.

DEVRIES, P., P. Krastev and B. Meade (2016), Geodetically constrained stress transfer and earthquake triggering along the North Anatolian fault, Geochemistry, Geophysics, Geosystems, 17, 2700–2716, doi:10.1002/2016GC006313.

DEVRIES, P. and B. Meade (2016), Kinematically consistent models of viscoelastic stress evolution, Geophysical Research Letters, 43, doi:10.1002/2016GL068375.

Presentations:

DEVRIES, P., T. Ben Thompson, and B. Meade (2016), Accelerating viscoelastic calculations with neural networks, Invited talk, American Geophysical Union, San Francisco, CA.

DEVRIES, P. and B. Meade (2016), Time-dependent stress transfer and earthquake triggering along the North Anatolian fault in Turkey, Harvard Horizons Symposium, Cambridge, MA.

DEVRIES, P., P. Krastev and B. Meade (2015), Geodetically constrained models of viscoelastic stress transfer and earthquake triggering along the North Anatolian Fault, Invited talk, Computational Research in Boston and Beyond Seminar, Massachusetts Institute of Technology, Boston, MA.

DEVRIES, P., P. Krastev and B. Meade (2015), Geodetically constrained models of viscoelastic stress transfer and earthquake triggering along the North Anatolian Fault, Department of Energy Computational Science Graduate Research Fellowship (CSGF) Annual Review Conference, Washington D.C.

DEVRIES, P., P. Krastev and B. Meade (2014), Viscoelastic block models of the North Anatolian fault, Invited talk, American Geophysical Union, San Francisco, CA.

Poster Presentations:

DEVRIES, P., T. Ben Thompson and B. Meade (2016), Accelerating viscoelastic calculations with neural networks, Southern California Earthquake Center Annual Meeting, Palm Springs, CA.

DEVRIES, P., P. Krastev and B. Meade (2016), Geodetically constrained models of viscoelastic stress transfer and earthquake triggering along the North Anatolian fault, American Geophysical Union, San Francisco, CA.

DEVRIES, P. and B. Meade (2014), Sixty years of viscoelastic relaxation across the North Anatolian fault, Department of Energy CSGF Annual Review Conference, Washington D.C.

Media Coverage:

- Understanding Faults, The Harvard Gazette

- Time-Dependent Stress Transfer and Earthquake Triggering along the North Anatolian Fault in Turkey, featuring Phoebe DeVries, a PhD candidate in Earth and Planetary Sciences, Harvard Horizons Symposium

- A Rosetta Stone for Earthquakes, Harvard Magazine

- Neural Networks & Earthquakes, MGHPCC

Collaborators and Resources

Collaborators:

- Brendan Meade, Professor, Department of Earth and Planetary Sciences, Harvard University

- Phoebe Robinson DeVries, Ph.D., Former Doctoral Candidate, Department of Earth and Planetary Sciences, Harvard University

- Plamen Krastev, Ph.D., ACI-REF, FAS Research Computing, Harvard University

University ACI-REF)

Resources:

- Harvard University High Performance Computing Environment, Odyssey

- National Energy Research Scientific Computing Center, NERSC

Funding Sources

Southern California Earthquake Center (contribution 6239) funded by NSF Cooperative Agreement EAR‐1033462 and USGS Cooperative Agreement G12AC20038

Department of Energy Computational Science Graduate Fellowship Program of the Office of Science and National Nuclear Security Administration in the Department of Energy under contract DE‐FG02‐97ER25308

National Science Foundation, NSF ACI-1341935 Advanced Cyberinfrastructure – Research and Educational Facilitation: Campus-Based Computational Research Support