Lauren Michael, University of Wisconsin – Madison, June 20, 2018

Researchers in Dane Morgan’s materials research group at UW-Madison sought to complete a large community database of single-atom diffusion data across a wide range of materials, using a workflow of many multi-core simulations run with VASP, a popular materials simulation package. By leveraging high-throughput computing systems, with significant facilitation from ACI-REFs, the group completed the computational work planned for the 5-year project in a little over one year. The high-throughput scalability and technology sharing developed for the project, with support from campus ACI-REFs, have since enabled a number of additional campus researchers to tackle other VASP-dependent research problems at scales they never thought possible.

The Objective

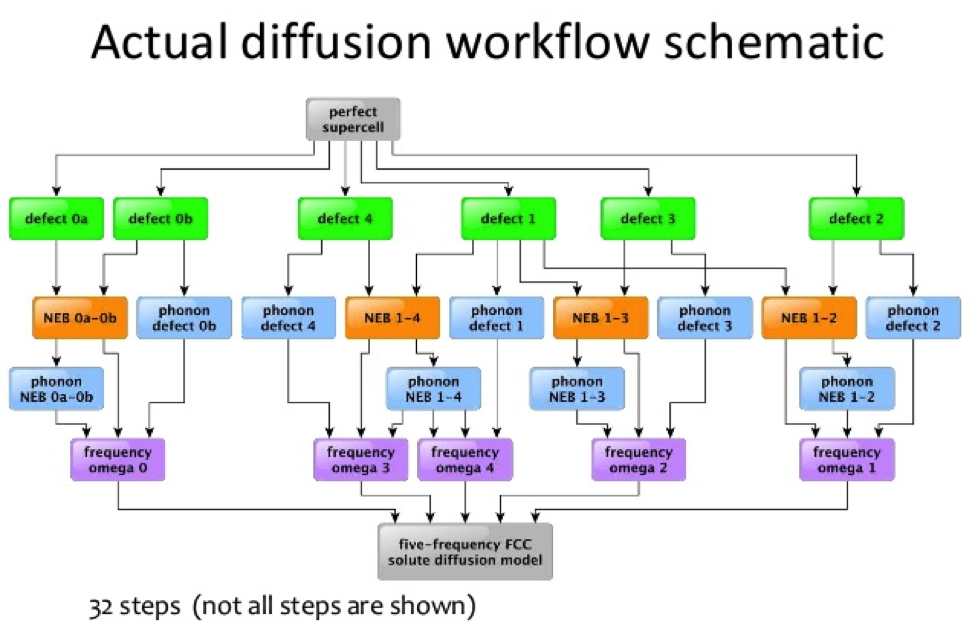

In late 2013, Lauren Michael (ACI-REF) at UW-Madison’s Center for High Throughput Computing (CHTC) was first approached by graduate student (and CHTC user) Tam Mayeshiba of Dane Morgan’s research group. Tam was planning an extreme amount of computation to produce the largest database of single-atom diffusion coefficients, as a worldwide community resource for materials scientists. Tam and Henry Wu, another graduate student working on the project, would need to repeat a complex workflow of thousands of multi-core simulations (see Figure 1) using the Vienna Ab initio Simulation Package (VASP) for each combination of single atom and host material. However, the workflow and the VASP software it depended on presented several difficulties in scaling beyond a typical HPC cluster configuration to CHTC’s vast high-throughput computing (HTC) capacity. At the same time, Tam had determined that the HPC cluster infrastructures available for the project (including those at CHTC, XSEDE, and the group’s own cluster) did not provide enough capacity to complete the work in a reasonable amount of time.

Seeing a tremendous opportunity, not just for Tam’s project but for the numerous other campus users of VASP, Lauren brought in Greg Thain (HTCondor developer and local expert in running message-passing-interface (MPI) jobs in HTC environments) to help identify the key barriers and the tasks needed to execute the workflow on CHTC’s HTC System. That system includes a large local HTCondor cluster, HTCondor-enabled backfill of multiple other clusters on campus, and the Open Science Grid (OSG), which offers backfill capacity at over 100 large-scale computing sites across the country. If these resources could be leveraged at the scales typical for other CHTC users, Tam’s project and those of other multi-core HTC users could be completed and expanded in far less time, and the work to adapt VASP for HTC would likely help many other researchers achieve similar impacts on their projects.

The Solution

Together, Tam, Lauren, and Greg identified several objectives that would make the VASP simulations HTC-amenable, automate the project across compute systems (CHTC and others), and improve scalability and turnaround for this and other VASP-dependent projects:

(1) Create a portable VASP installation that need not execute on the same filesystem where it was first installed (a known barrier for VASP portability to distributed HTC systems that function without a shared filesystem).

(2) Implement self-checkpointing into each VASP simulation, so that a simulation job can be readily restarted if interrupted during a backfill execution.

(3) Refactor the group’s existing MAterials Simulation Toolkit (MAST) workflow manager to integrate with HTCondor’s DAGMan workflow feature, for maximum automation across the project.

(4) Address the above, especially #1, in a way that could be adopted by other researchers on campus, and determine a way to identify such researchers so that they, too, can benefit from HTC scalability for VASP-dependent research.

After weighing various options and VASP dependency barriers with Greg and Lauren, Tam set out to use the CDE application virtualization tool to create a self-contained VASP installation that would run after HTCondor transferred it to any of various job execution servers. She also worked with Lauren and Greg to understand HTCondor’s support for self-checkpointing jobs, and implemented a job execution script that, for jobs running as backfill, would resume a simulation from its intermediate data after HTCondor interrupted and rescheduled the job.
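The restart step described above can be illustrated with a minimal Python sketch. It is not Tam’s script, whose details are not given here; it assumes a single VASP relaxation directory and relies on the common VASP convention that the latest structure is written to `CONTCAR` while the starting structure is read from `POSCAR`, so copying one over the other lets an interrupted run continue from where it left off. The function name `prepare_vasp_restart` and the `POSCAR.orig` backup file are hypothetical. A real HTCondor setup also has to keep the intermediate files across the interruption (for example via the `checkpoint_exit_code` submit command in HTCondor releases that support it); which mechanism CHTC actually used is not stated here.

```python
import shutil
from pathlib import Path


def prepare_vasp_restart(workdir: str) -> bool:
    """Set up a VASP run directory to resume from its last saved geometry.

    Hypothetical helper, not the script used in the project.
    Returns True if a restart was prepared, False for a fresh start.
    """
    run = Path(workdir)
    contcar = run / "CONTCAR"      # VASP writes the latest structure here
    poscar = run / "POSCAR"        # VASP reads the starting structure here
    backup = run / "POSCAR.orig"   # hypothetical one-time copy of the input

    # A missing or empty CONTCAR means the previous attempt never saved a
    # structure, so the run should start from the original POSCAR.
    if not contcar.is_file() or contcar.stat().st_size == 0:
        return False

    # Preserve the original input geometry once, before it is overwritten.
    if poscar.is_file() and not backup.exists():
        shutil.copy2(poscar, backup)

    # Resume: the last written geometry becomes the new starting geometry.
    shutil.copy2(contcar, poscar)
    return True
```

A wrapper script would call this before launching VASP on every (re)start; calculations that keep structures in several subdirectories would repeat the step in each one.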

During Tam’s testing and refinement of the self-checkpointing VASP jobs, Greg and Lauren found that Tam’s throughput for 16-core and 20-core jobs (the minimum number of cores her simulations needed to complete a checkpoint with a low rate of backfill interruption) was significantly lower than for single-core and few-core jobs. Greg therefore proposed and tested changes to HTCondor settings that would drain the largest servers sooner and more readily to accommodate multi-core slots, benefiting Tam’s jobs and other multi-core jobs. To date, these changes have enabled many dozens of researchers with single-server, multi-core jobs to achieve significantly faster turnaround on CHTC’s local HTCondor cluster, even compared to CHTC’s MPI-optimized HPC Cluster.

With turnaround now fast enough to finish the project much sooner, Tam tackled the final step: full automation across multiple compute infrastructures (CHTC plus other capacity available to the project, which Henry pursued more heavily), achieved by integrating the Morgan research group’s MAST toolkit with HTCondor’s existing DAGMan workflow manager.
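The MAST-to-DAGMan integration is not described in detail here, so the following Python sketch only shows the general idea: a workflow of dependent VASP steps rendered as an HTCondor DAGMan input file using DAGMan’s `JOB`, `PARENT ... CHILD`, and `RETRY` statements. The function `write_dag`, the node names, and the submit file names are hypothetical and do not reflect MAST’s actual code.

```python
from typing import Dict, List


def write_dag(jobs: Dict[str, str],
              parents: Dict[str, List[str]],
              retries: int = 0) -> str:
    """Render an HTCondor DAGMan input file as text (hypothetical helper).

    jobs:    node name -> submit description file for that node
    parents: node name -> nodes that must succeed before it may start
    retries: if > 0, how many times DAGMan should resubmit a failed node
    """
    # Reject dependencies that mention nodes never declared with JOB.
    for child, deps in parents.items():
        unknown = [n for n in [child, *deps] if n not in jobs]
        if unknown:
            raise ValueError(f"undeclared DAG node(s): {unknown}")

    # One JOB line per node: JOB <name> <submit file>
    lines = [f"JOB {name} {submit}" for name, submit in jobs.items()]

    # Optional automatic resubmission of failed nodes.
    if retries > 0:
        lines += [f"RETRY {name} {retries}" for name in jobs]

    # PARENT <p1> <p2> ... CHILD <child>
    for child, deps in parents.items():
        if deps:
            lines.append(f"PARENT {' '.join(deps)} CHILD {child}")

    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical three-node workflow for one solute/host pair.
    print(write_dag(
        jobs={"relax_host": "relax_host.sub",
              "relax_solute": "relax_solute.sub",
              "neb_hop": "neb_hop.sub"},
        parents={"neb_hop": ["relax_host", "relax_solute"]},
        retries=3,
    ))
```

The resulting text could be saved as, say, `workflow.dag` and submitted with `condor_submit_dag workflow.dag`. DAGMan then submits each node only after all of its parents have succeeded, and `RETRY` resubmits a failed node up to the given number of times, which suits long-running backfill work where individual jobs may fail.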

Beyond the impact on Tam and Henry’s diffusion database project, the HTC-portable implementation of VASP has been applied to other projects. Lauren and fellow Research Computing Facilitator Christina Koch (also ACI-REF) have already identified more than a dozen researchers on campus who have begun using the VASP-CDE implementation and self-checkpointing job scripts on CHTC’s HTC System. Before Tam completed her PhD, Lauren and Christina arranged joint meetings between Tam and known VASP users with numerous simulations running on CHTC’s HPC Cluster. Given Tam’s familiarity with the VASP software and the vocabulary of research projects that use it, she demonstrated the functionality of the self-checkpointing VASP-CDE setup. Since Tam completed her PhD, this peer experience-sharing role has been happily filled by Ahmed Elnabawy, an early adopter and heavy user of the VASP-CDE and self-checkpointing job tools, who works in the group of Professor Manos Mavrikakis in the Department of Chemical and Biological Engineering. As a result, a number of other researchers and groups have benefitted (see below).

The Result

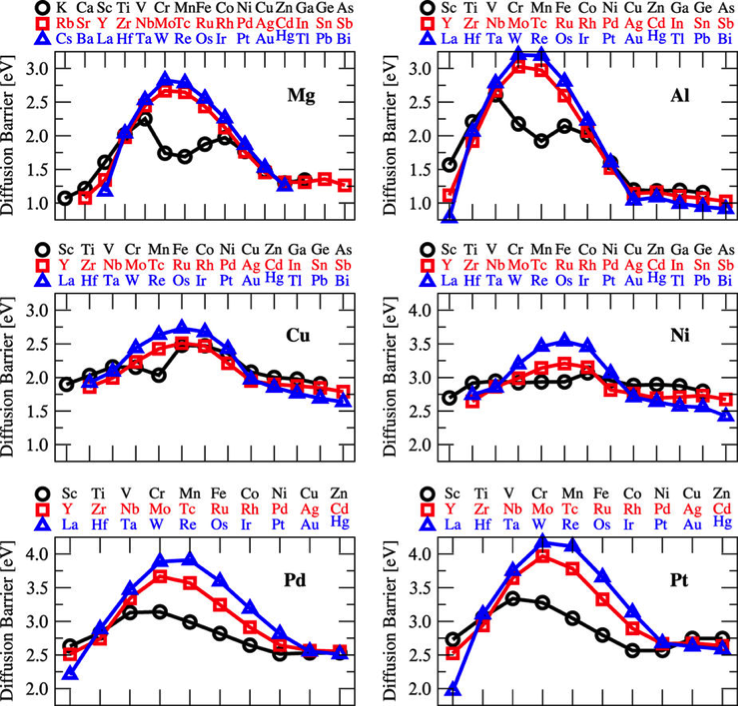

By early 2016, Tam and Henry had completed and published work on the open, online UW CMG Dilute Solute Diffusion Database of more than 260 dilute solute diffusion systems in Mg, Al, Cu, Ni, Pd, Pt, and W, which required many thousands of VASP simulations. In the remainder of the project funding period, the group has continued to grow the database by adding usability features and built-in visualizations (Figure 2), has created software tools that experimental materials researchers can apply to diffusion data from the database, and has expanded the usability and applicability of the MAST toolkit for adoption by other materials scientists. To date, the database is the largest collection of computed diffusion data, making it a tremendous resource for the materials research community and a significant leap in the effort to design a variety of societally impactful materials, including semiconductors and nuclear reactor metals, where experimental data are otherwise incomplete. Furthermore, the work was completed in a fraction of the time planned within the 5-year funded project. As Professor Morgan stated in a UW-Madison story about the project:

“Computation can generate vast amounts of good quality data at a fraction of the cost or time to perform experiments … In some cases, they can be equally good — if not better than — experimental values, because we can perform the calculations at low temperature and we avoid many of the challenges of the experimental analysis.”

Most importantly, the work required to achieve this accomplishment has had far-reaching impacts on CHTC’s support of multi-core jobs across numerous other research domains (from the life sciences to the physical sciences) and has improved the ability of other researchers to run VASP on CHTC’s HTC System for various materials and chemical engineering applications, with greater scalability than many traditional HPC configurations can offer. To date, the researchers benefitting from the VASP-CDE and self-checkpointing tools on CHTC’s HTC System include (among others):

- Saurabh Bhandari, studying heterogeneous catalytic reaction systems, including vapor phase decomposition of formic acid (FA) with transition metal catalysts, a major step of biomass reforming in the renewable production of hydrogen.

- Benjamin Chen, studying energetics of formic acid decomposition on gold nanoclusters via Monte Carlo simulations and parameterized representations of species interactions.

- Ahmed Elnabawy, studying catalytic reaction intermediates on metal and nonmetal surfaces for a variety of fuel cell applications.

- Florian Goeltl, studying properties of transition metal exchanged zeolites in the conversion of methane to methanol, a highly important reaction for the utilization of natural gas, who notes, “Answering these questions at a theoretical level allows experimental researchers to optimize catalyst synthesis, preparation and reaction conditions for ideal results.”

- Thomas Kropp, studying oxidation of carbon monoxide on graphene-based catalysts, for more efficient carbon monoxide degradation at low temperatures, who notes, “Due to the large number of reaction intermediates that have to be considered, this project would not be possible without the HTC cluster.”

- Ying Ma (and research group at University of Wisconsin-Eau Claire), studying various aspects of lithium sulfide battery design, including steady-state crystal and molecular structures, and reactions between battery solution components.

- Sean Tacy, studying the promotion of mercury partitioning between gas and particle phases on aerosol surfaces (e.g., iron oxides), for catalytic applications and for understanding the conditions for mercury handling, who notes, “Without access to these resources, the progress made on this project would be significantly limited.”

- Roberto Schimmenti, studying catalytic properties of supported sub-nanometer metal clusters, toward new strategies for improving selectivity for hydrogen production in biomass upgrading technologies.

- Lang Xu, studying reaction features of the hydrodechlorination of chlorinated hydrocarbon compounds over transition metal catalysts, toward the rational design of numerous catalytic materials for hydrodechlorination applications.

Notable Publications and Presentations Resulting from this Work

T. Angsten, T. Mayeshiba, H. Wu, D. Morgan. “Elemental vacancy diffusion database from high-throughput first-principles calculations for fcc and hcp structures.” New Journal of Physics 16, 015018 (2014).

H. Wu, T. Mayeshiba, D. Morgan. “High-throughput ab-initio calculations automated by the MAterials Simulation Toolkit (MAST).” Poster, Future Technologies in Automated Atomistic Simulations, CECAM-HQ-EPFL in Lausanne, Switzerland (2015).

H. Wu, T. Mayeshiba, D. Morgan. “High-throughput ab-initio dilute solute diffusion database.” Nature Scientific Data 3, 160054 (2016).

T. Mayeshiba, H. Wu, Z. Song, B. Afflerbach, D. Morgan. “The MAterials Simulation Toolkit for Defect and Diffusion Calculations.” Presentation, NISD/CHiMaD Data Workshop, Evanston, IL (2016).

D. Morgan. “HTC for Materials Databases and Materials Design.” HTC Showcase presentation, Open Science Grid User School, Madison, WI (2016 and 2017).

F. Göltl, P. Müller, P. Uchupalanun, P. Sautet, and I. Hermans. “Developing a Descriptor-Based Approach for CO and NO Adsorption Strength to Transition Metal Sites in Zeolites.” Chemistry of Materials 29, 6434 (2017).

M. Zhao, A. O. Elnabawy, M. Vara, L. Xu, Z. D. Hood, X. Yang, K. D. Gilroy, L. Figueroa-Cosme, M. Chi, M. Mavrikakis, and Y. Xia. “Facile Synthesis of Ru-Based Octahedral Nanocages with Ultrathin Walls in a Face-Centered Cubic Structure.” Chemistry of Materials 29, 9227 (2017).

K. D. Gilroy, A. O. Elnabawy, T.-H. Yang, L. T. Roling, J. Howe, M. Mavrikakis, and Y. Xia. “Thermal Stability of Metal Nanocrystals: An Investigation of the Surface and Bulk Reconstructions of Pd Concave Icosahedra.” Nano Letters 17, 3655 (2017).

M. Vara, L. T. Roling, X. Wang, A. O. Elnabawy, Z. D. Hood, M. Chi, M. Mavrikakis, and Y. Xia. “Understanding the Thermal Stability of Palladium-Platinum Core-Shell Nanocrystals by In Situ Transmission Electron Microscopy and Density Functional Theory.” ACS Nano 11, 4571 (2017).

R. Schimmenti, R. Cortese, D. Duca, and M. Mavrikakis. “Boron Nitride-supported Sub-nanometer Pd6 Clusters for Formic Acid Decomposition: A DFT Study.” ChemCatChem 9, 1610 (2017).

S. Rangarajan and M. Mavrikakis. “On the Preferred Active Sites of Promoted MoS2 for Hydrodesulfurization with Minimal Organonitrogen Inhibition.” ACS Catalysis 7, 501 (2017).

Collaborators and Resources

Collaborators

- Tam Mayeshiba, Graduate Research Assistant, Department of Materials Science and Engineering, University of Wisconsin-Madison

- Henry Wu, Graduate Research Assistant, Department of Materials Science and Engineering, University of Wisconsin-Madison

- Dane Morgan, Professor, Department of Materials Science and Engineering, University of Wisconsin-Madison

- Ahmed Elnabawy, Graduate Research Assistant, Department of Chemical and Biological Engineering, University of Wisconsin-Madison

- Lauren Michael, Research Computing Facilitator, Center for High Throughput Computing, University of Wisconsin-Madison

- Greg Thain, Software Engineer, Center for High Throughput Computing, University of Wisconsin-Madison

- Miron Livny, Professor of Computer Sciences and Director, Center for High Throughput Computing, University of Wisconsin-Madison

- Aaron Moate, Systems Administrator, Center for High Throughput Computing, University of Wisconsin-Madison

- Nathan Yehle, (former) Systems Administrator, Center for High Throughput Computing, University of Wisconsin-Madison

- Christina Koch, Research Computing Facilitator, Center for High Throughput Computing, University of Wisconsin-Madison

- Numerous additional researchers who have benefitted from the portable VASP-CDE implementation (see above).

Resources Leveraged

- Center for High Throughput Computing – http://chtc.cs.wisc.edu

- CDE – http://www.pgbovine.net/cde.html

- HTCondor Software Project – https://research.cs.wisc.edu/htcondor/

- MAterials Simulation Toolkit (MAST) – https://arxiv.org/abs/1610.00594

- Open Science Grid – https://opensciencegrid.org/

- UW CMG Dilute Solute Diffusion Database – http://diffusiondata.materialshub.org/

- Vienna Ab initio Simulation Package (VASP) – https://www.vasp.at/

- XSEDE – https://www.xsede.org/

Funding Sources

SI2-SSI: Collaborative Research: A Computational Materials Data and Design Environment (NSF 1148011).

NSF Graduate Fellowship Program (DGE-0718123).

UW-Madison Graduate Engineering Research Scholars Program.

The HTCondor Project and the Center for High Throughput Computing (numerous NSF, DOE, and UW-Madison funds).

Open Science Grid (NSF 1148698; DOE Office of Science).

Advanced Cyberinfrastructure – Research and Educational Facilitation: Campus-Based Computational Research Support (NSF 1341935).

Numerous additional projects and funding of researchers who have benefitted from the portable VASP-CDE implementation.