Plamen Krastev, Harvard University, August 29, 2018

Researchers from the Department of Earth and Planetary Sciences at Harvard University investigated how tropical air convection interacts with the global flow and, in particular, how it affects the distribution and variability of rainfall. These issues matter to society: variations in the Asian monsoon rain, for example, can bring droughts or floods and affect the lives of billions of people. ACI-REFs worked closely with the researchers and helped to significantly improve the efficiency and scalability of their climate modeling and forecasting code. These improvements enabled the research group’s code to run an order of magnitude faster (typical runs completed in 10 hours instead of 160 hours) and paved the way to more detailed and reliable weather forecasting. The lessons learned also helped researchers achieve similar performance gains with other weather simulation codes widely used by the climate modeling community, with far-reaching impact on climate modeling research at Harvard and beyond.

The Objective

In September 2016, Zhiming Kuang, a Professor of Atmospheric and Environmental Science in the Department of Earth and Planetary Sciences, and his postdoctoral associate, Giuseppe Torri, approached Faculty of Arts and Sciences (FAS) Research Computing at Harvard University to discuss ways of improving the efficiency and scaling of the Weather Research and Forecasting (WRF) Model on Harvard’s Odyssey cluster. WRF was the primary computational tool the researchers used to understand the interaction between air and water convection and the large-scale circulation in the Tropics, an outstanding topic of major importance for geographic regions where droughts and floods frequently disrupt the lives of billions of people. The researchers had designed a state-of-the-art climate model, incorporating a large amount of historical climate data from key weather stations in the Tropics, to simulate the convection dynamics and study how it affects the rainfall distribution and its variability. To address these questions, the research group had scheduled a large number of WRF simulations over a large set of input parameters, with larger model dimensions and higher resolution than usual. However, WRF took too long to complete: about 160 hours for a typical run over the targeted parameter space. Resolving these computational challenges was critical for achieving the project’s research goals and for the success of Giuseppe’s postdoctoral research.

The major objective, accordingly, was to significantly reduce the runtime of WRF by optimizing the computational workflow and leveraging the cutting-edge computing resources of FAS Research Computing. Achieving this goal would allow the researchers to study convection weather patterns in the Tropics in much greater detail and would pave the way to faster and more reliable weather forecasting in geographic regions where a prompt and accurate forecast could potentially save many lives. In addition, the experience gained by optimizing and running WRF could help researchers achieve similar performance improvements with other computational tools widely used by the climate modeling community. The project’s success would therefore have an important impact not only on researchers at Harvard University but also on climate modeling research worldwide.

The Solution

Together, Zhiming, Giuseppe, and Plamen (Harvard’s ACI-REF) identified two specific objectives for speeding up computations with WRF:

(1) Compiling WRF with optimized compiler options for the target hardware architecture, and determining an optimal job configuration for production runs.

(2) Optimizing the application’s I/O by migrating the workflow to a high-performance parallel filesystem.

Zhiming Kuang’s research group had recently acquired new dedicated hardware consisting of modern compute nodes with the Intel Broadwell chip architecture, and the researchers were eager to start using this resource for their computations. Previously compiled applications, however, performed very poorly on the new hardware: application runs were orders of magnitude slower than runs on the research group’s older queues. WRF was no exception, and its runs on the new nodes were extremely slow as well. The Intel Broadwell chips introduced important microarchitectural changes that made them superior to the previous generation of hardware. However, to tap the full compute power of the Broadwell CPUs, applications needed to be recompiled with optimized compiler options specific to the target hardware. Plamen undertook the task of rebuilding WRF and testing it extensively on the new dedicated queue. This also included rebuilding several key software dependencies used by WRF, such as the Message Passing Interface (MPI) and I/O (HDF5 and NetCDF) libraries. The newly compiled version of the code demonstrated improved speed and scalability: runs that would take 160 hours to finish on the old hardware were completed in approximately 40 hours, a factor of 4 faster.
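The idea of matching compiler options to the target hardware can be sketched in a few lines of Python. The source does not say which compiler or which options were used for the WRF rebuild, so everything below is an illustrative assumption: the helper names `parse_cpu_flags` and `suggest_arch_flags` are hypothetical, and the mapping from CPU features to GCC (`-march=...`) and classic Intel compiler (`-x...`) options is a simplified guess at a reasonable starting point, not the configuration used in this project.

```python
from pathlib import Path


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the CPU feature flags from /proc/cpuinfo-style text (Linux)."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()


def suggest_arch_flags(cpu_flags: set[str]) -> dict[str, str]:
    """Map CPU features to candidate architecture options (assumed mapping).

    Newer CPUs that also report avx2 and adx fall into the Broadwell branch,
    which is conservative but still valid for them.
    """
    if {"avx2", "adx"} <= cpu_flags:      # Broadwell-class features
        return {"gcc": "-march=broadwell", "intel": "-xCORE-AVX2"}
    if "avx2" in cpu_flags:               # Haswell-class features
        return {"gcc": "-march=haswell", "intel": "-xCORE-AVX2"}
    if "avx" in cpu_flags:                # Sandy Bridge-class features
        return {"gcc": "-march=sandybridge", "intel": "-xAVX"}
    return {"gcc": "-march=x86-64", "intel": "-xSSE2"}  # generic baseline


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    print(suggest_arch_flags(parse_cpu_flags(text)))
```

Running this on a compute node (rather than a login node) matters, because the two may have different CPUs. The suggested options would then be passed to the build of the application and of its dependencies.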

Motivated by this success, the researchers were ready to take the next step toward speeding up WRF: migrating their research data to a high-performance parallel filesystem to take advantage of parallel I/O for even faster processing. The research lab’s data was hosted on a legacy storage server, which represented a significant bottleneck for two main reasons. First, the filesystem on the legacy storage server was intrinsically serial and could not support faster parallel I/O. Second, the storage server and the new Intel compute nodes were physically located in two distinct data centers 100 miles apart, and this “topology” affected application performance: data traveled back and forth between the two data centers, saturating the network connection and creating tremendous communication overhead and computational inefficiency. FAS Research Computing facilitated the migration of the research lab’s data to a high-performance parallel filesystem (Lustre) located in the same data center as the dedicated new compute nodes, thus resolving the I/O inefficiencies.
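A storage bottleneck of this kind can be checked with a simple write-throughput measurement run from a compute node against each filesystem. The sketch below is only an illustration and is not the procedure used in this project; the function name `write_throughput_mb_s` and its default sizes are hypothetical. It measures a single sequential writer, so it says nothing about parallel I/O scaling, but it is usually enough to expose a slow or remote storage path.

```python
import os
import tempfile
import time


def write_throughput_mb_s(directory: str, size_mb: int = 64, block_mb: int = 4) -> float:
    """Write about size_mb of data into `directory` and return MB/s (single writer)."""
    block = os.urandom(block_mb * 1024 * 1024)
    n_blocks = max(1, size_mb // block_mb)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(n_blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # make sure the data actually reaches storage
        elapsed = max(time.perf_counter() - start, 1e-9)
    finally:
        os.remove(path)  # clean up the scratch file
    return (n_blocks * block_mb) / elapsed


if __name__ == "__main__":
    # Replace the temporary directory with a path on each filesystem to compare.
    target = tempfile.gettempdir()
    print(f"{target}: {write_throughput_mb_s(target, size_mb=8):.1f} MB/s")
```

Comparing the number reported for a directory on the legacy server with the number for a directory on the parallel filesystem gives a rough first indication of how much time a run spends waiting on storage.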

The combination of these improvements resulted in an order-of-magnitude acceleration of weather simulations compared to those using the previous application setup. Seeing these results, Giuseppe Torri wrote:

“I checked the outputs just now and the model has already run ~13 days, which is an order of magnitude faster than what I had managed to get.”

These successes allowed the researchers to complete the scheduled simulation runs as planned, and the results of these studies have been published in prestigious journals and reported at major national and international conferences (see below).
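The runtimes quoted in this story can be combined into per-step speedups. The short calculation below uses only the figures given in the text (160 hours originally, about 40 hours after the rebuild, and 10 hours for typical runs in the summary). Attributing the remaining gain to the data migration is an inference: it assumes the 10-hour figure reflects both steps and that nothing else changed between runs.

```python
# Runtimes in hours, taken from the text.
BASELINE_HOURS = 160.0         # original setup
AFTER_RECOMPILE_HOURS = 40.0   # after rebuilding WRF for the new nodes
FINAL_HOURS = 10.0             # assumed: after rebuild and data migration


def speedup(before_hours: float, after_hours: float) -> float:
    """Return how many times faster the 'after' run is than the 'before' run."""
    if before_hours <= 0 or after_hours <= 0:
        raise ValueError("runtimes must be positive")
    return before_hours / after_hours


recompile_gain = speedup(BASELINE_HOURS, AFTER_RECOMPILE_HOURS)  # 160 / 40
io_gain = speedup(AFTER_RECOMPILE_HOURS, FINAL_HOURS)            # 40 / 10
total_gain = speedup(BASELINE_HOURS, FINAL_HOURS)                # 160 / 10

print(f"recompilation: {recompile_gain:.0f}x")
print(f"data migration (inferred): {io_gain:.0f}x")
print(f"combined: {total_gain:.0f}x")
```

Under these assumptions the two steps contribute a factor of 4 each, and their product, a factor of 16, matches the "order of magnitude" improvement described above.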

The Result

The fruitful collaboration between FAS Research Computing and Zhiming Kuang’s research group led to a significant improvement in the performance of WRF in terms of speed and scalability, which greatly helped the PI’s postdoctoral associate, Giuseppe Torri, to succeed in his research. He wrote several highly visible publications and reported his work at prestigious national and international conferences. Moreover, this work helped advance the understanding of air and water convection and its interaction with global flow patterns, which is critical for prompt and accurate weather forecasting in the tropical regions most affected by devastating floods and droughts.

Most importantly, the lessons learned during this collaboration helped researchers achieve similar performance improvements with other computational tools widely used for weather forecasting by the climate modeling community, with far-reaching impact on climate modeling research at Harvard and beyond. Examples of such codes include the System for Atmospheric Modeling (SAM) and CM1 (a computer program used for atmospheric research).

Notable Publications and Presentations Resulting from this Work

Publications:

- Torri G., Ma D., Kuang Z., Stable water isotopes and large-scale vertical motions in the tropics, J. Geophys. Res., 122, 3703-3717, (2017).

- Gentine P., Garelli A., Park S., Nie J., Torri G., Kuang Z., Role of surface heat fluxes underneath cold pools, Geophys. Res. Lett., 43, doi:10.1002/2015GL067262, (2016).

- Torri G., Kuang Z., Rain evaporation and moist patches in tropical boundary layers, Geophys. Res. Lett., 43, doi:10.1002/2016GL070893, (2016).

- Torri G., Kuang Z., A Lagrangian study of precipitation-driven downdrafts, J. Atmos. Sci., 73, 839-854, (2016).

Presentations:

- Investigating cold pool dynamics with a Lagrangian perspective, 32nd Conference on Hurricanes and Tropical Meteorology, Puerto Rico, 2016.

Supporting Media

Dr. Giuseppe Torri (Postdoctoral Fellow in Earth and Planetary Sciences)

Dr. Plamen G. Krastev (Harvard University ACI-REF)

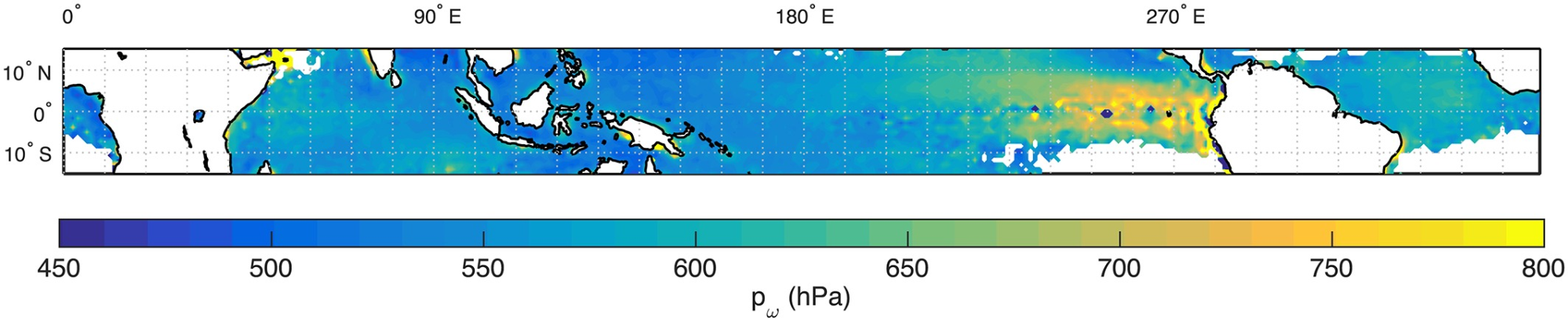

Figure 1: An example visualization of convection patterns over tropical oceans, based on models by Harvard researchers (Image courtesy of Journal of Geophysical Research: Atmospheres)

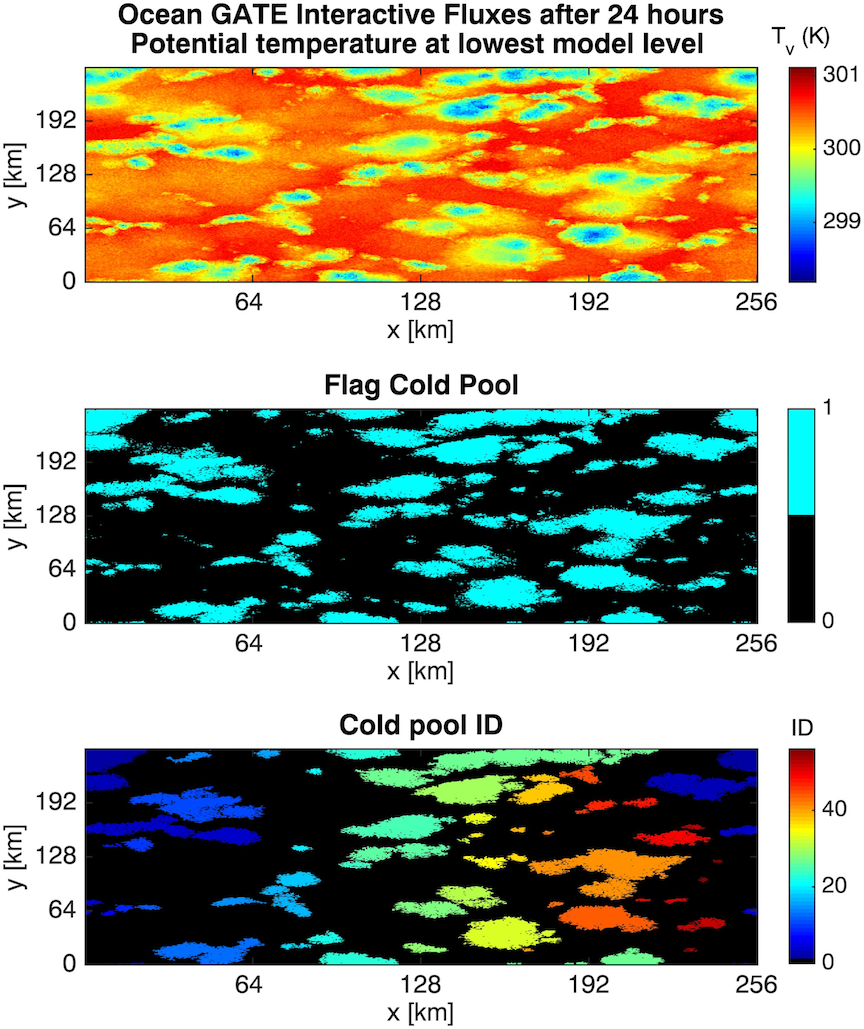

Figure 2: Example visualization of simulation data from Kuang’s research group (Image courtesy of Geophysical Research Letters)

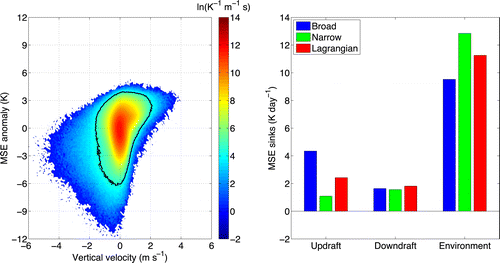

Figure 3: An example visualization of simulated Lagrangian particles generated by one of the computational models designed by Harvard University researchers (Image courtesy of American Meteorological Society)

Collaborators and Resources

Collaborators:

- Zhiming Kuang, Professor, Department of Earth and Planetary Sciences, Harvard University

- Giuseppe Torri, Ph.D., Postdoctoral Fellow, Department of Earth and Planetary Sciences, Harvard University

- Plamen Krastev, Ph.D., ACI-REF, FAS Research Computing, Harvard University

Resources:

- Harvard University High Performance Computing Environment, Odyssey

Funding Sources

- National Science Foundation under contracts AGS‐1062016 and AGS‐1260380

- Department of Energy under contracts DE-SC0008720 and DE‐SC0008679

- National Science Foundation, NSF ACI-1341935 Advanced Cyberinfrastructure – Research and Educational Facilitation: Campus-Based Computational Research Support