-

Transitioning from PBS to SLURM (Part I)

USC High-Performance Computing recently transitioned from PBS Torque/Moab to Slurm. The transition has gone surprisingly well, and I wanted to blog a little about what we did as ACI-REFs to prepare for it. The transition took place during the week of March 19th. By early January, the lead Slurm administrator had set up a representative…

-

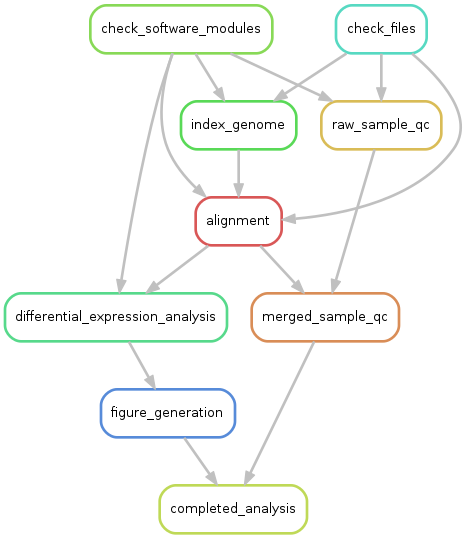

Evaluating Work Flow Management Tools

Brett Milash, Center for High Performance Computing, University of Utah

One of my roles at the University of Utah involves supporting scientists developing computing work flows on the computer clusters here at the Center for High Performance Computing. Toward this goal I am evaluating a number of the countless work flow management tools out…

-

Keep Calm and Install Software

At the Center for High Throughput Computing at UW – Madison, we don’t install software system-wide for specific researchers, except for common, well-used system libraries. Instead, we recommend that individual researchers download or build a copy of their desired software locally. In this post, I’ll talk about why we do this, how it works, and…

-

Science Gateways Institute Conference 2017

I just attended the Science Gateways Community Institute 2017 Conference in Ann Arbor, MI, and had a great time learning about the variety of resources available to researchers and developers alike. If you are not familiar with science gateways, the basic definition is: a tool that allows science & engineering communities to…

-

Part 2: Creating a workshop on Data Mining

Linh Ngo, Clemson University

After the first workshop on Data Mining was offered, we had several follow-up appointments with workshop participants. While the majority of these meetings involved clarifying details from the workshop, several of them were about techniques for developing specific data mining scenarios in actual research work, which were not covered…

-

Part 1: Creating a workshop on Data Mining

Linh Ngo, Clemson University

By Spring 2017, the Cyberinfrastructure and Technology Integration (CITI) Department at Clemson University had offered a range of introductory research computing workshops. Topics covered by these workshops include Linux, GitHub, Python, R, Hadoop MapReduce, and Apache Spark. However, post-workshop surveys indicate that participants want to have access to more advanced workshops.…

-

ACI-REF Virtual Residency 2017 – A First-Timer’s Experience

Cyd Burrows-Schilling, University of California, San Diego

The third ACI-REF Virtual Residency (VR) gathered again this year at the University of Oklahoma (OU) campus in Norman, from Sunday, July 30 through Friday, August 4, 2017. Being relatively new to the field of research facilitation (just 1 year in and loving it!), I was eager to participate…

-

PEARC ’17

Last month, I attended the inaugural PEARC17 conference in New Orleans, Louisiana. PEARC (Practice & Experience in Advanced Research Computing) was created by merging conferences for communities that support and benefit from research computing. As a result, there was a great variety in the types of sessions offered.

-

The deployment of an application on the Open Science Grid (OSG)

A few months ago, a biology group at the University of Utah contacted the Center for High-Performance Computing (CHPC). Their goal was to predict the MS/MS spectra for a set of 230,737 molecules. As a first step, I installed the CFM-ID code and its dependencies (vide infra). On our Ember cluster (Intel(R) Xeon(R) CPU X5660…

-

GPU Tech Conference

Quick entry. My colleague Cesar and I from USC High-Performance Computing attended the GPU Technology Conference in San Jose, California yesterday (www.gputechconf.com). I read that 5,000 attended last year and Nvidia, the conference host, expects a record number this year. The focus was Nvidia’s GPU (Graphics Processing Unit) technology and applications. Deep Learning: If I…