-

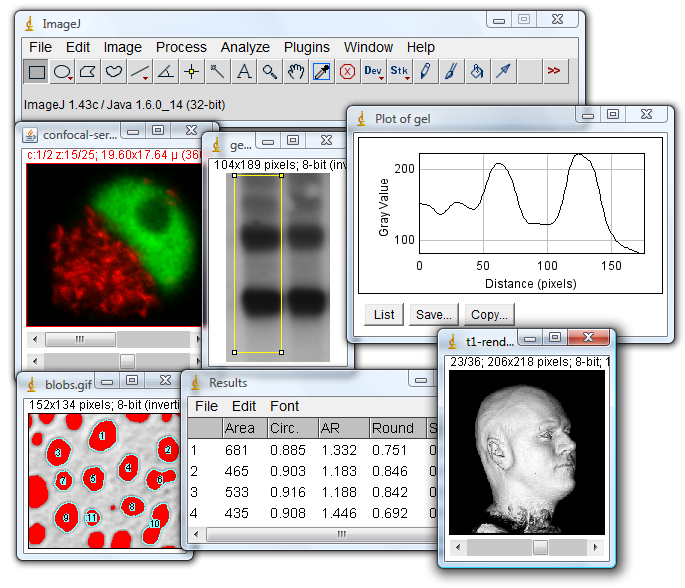

Using the Grid to Understand Crops

UW botanist harnesses the grid to illuminate crop growth

Spalding’s Birge Hall lab is pretty far removed from conventional dirt-under-the-nails botany. In one room, a row of stationary cameras bathed in red light train in on Petri dishes that contain seedlings growing in a gel. These cameras take pictures every two minutes, producing rich time-lapse…

-

Parallel program solutions for high energy physics

“I work in high energy theoretical particle physics. Specifically, I investigate physics beyond the Standard Model with a focus on dark matter implications. My research often requires scans of models that have very large numbers of parameters. This work could not be completed without the computing resources provided at CHPC. Almost as valuable as the…

-

Facilitating data transfer

Genomics research is one of the largest drivers in generating Big Data for science, with the potential to equal, if not surpass, the data output of the particle physics community. Like physicists, university-based life-science researchers must collaborate with counterparts and access data repositories across the nation and globe. The National Center for Biotechnology Information (NCBI)…

-

Computational transition in chemistry

Our group started using the CHPC resources intensively almost three years ago. We were guided by the CHPC staff through their available resources for chemistry, as well as through some basic aspects of Gaussian, a major program used for molecular computations, and Schrödinger’s MacroModel for conformational analysis. We initially obtained a temporary allocation, which was…

-

Big data and Alzheimer’s detection

Campus big data project may point the way to Alzheimer’s early detection

The team uses the Center for High Throughput Computing (CHTC), supported by UW-Madison and the Morgridge Institute, to segment MRI brain images that in some cases can take 12-24 hours for a single brain. “For many hundreds of brain scans, we need a…

-

Video processing on HPC

Currently, all users of a special microscope that generates terabytes of data must use a Windows workstation for data processing. Bob adapted this PC workflow to Harvard’s HPC environment; this now provides flexibility & higher throughput for the lab’s image processing needs. “To help us start tackling this challenge, we greatly benefited from the help…

-

Modeling cloud systems

“My research group recently became interested in using a program called VAPOR (www.vapor.ucar.edu) to help us easily and interactively visualize the results of large numerical simulations of cloud systems. We wanted to find a way to run VAPOR on one of our CHPC nodes and display the GUI remotely. That way we would need only…

-

Hawaii cluster online

The University of Hawaii ITS Advanced Computing Cluster launched on Tuesday, April 14, 2015.

-

HPC bioinformatics and transcriptomics

“I am a sixth year graduate student in the Department of Organismic and Evolutionary Biology. I started a transcriptomics project with little experience in coding and no experience in high powered computing (HPC). Without Bob Freeman’s work through ACI-REF I do not think I would have been able to complete my bioinformatics project. I was…

-

Connecting researchers and compute resources

Advanced Computing Initiative helps UW-Madison researchers sift and winnow data

The Advanced Computing Initiative (ACI) links researchers and computing resources to maximize productivity.